# GSoC Proposal: API Explorer for APIDash

## About

- **Full Name:** Chinmay Joshi
- **Email:** chinmayjoshi003@gmail.com
- **Phone:** +91 9028900343
- **Discord Handle:** _chinmay03
- **GitHub Profile:** [GitHub](https://github.com/chinmayjoshi03)
- **LinkedIn:** [LinkedIn](https://www.linkedin.com/in/chinmay-joshi-34115827b)
- **Time Zone:** Asia/Kolkata (IST)
- **Resume:** [Resume Link](https://drive.google.com/file/d/1_sE_Mi1iUyENXDcKyqHO22hs4ey_HvJr/view?usp=drive_link)

## University Info

- **University Name:** Savitribai Phule Pune University
- **Program:** Bachelor of Engineering (IT)
- **Year:** 2nd Year (2025 Batch)
- **Expected Graduation Date:** 2027

## Motivation & Past Experience

### 1. FOSS Contributions

I haven't contributed to FOSS projects yet, but I recently downloaded the APIDash codebase to my local machine and started exploring it to understand its structure and functionality.

### 2. Proud Achievement

One of my proudest achievements is the **KissanYukt project**, a mobile app developed for farmers to connect directly with consumers, eliminating middlemen and ensuring fair pricing. This project led our team to victory in the **Smart India Hackathon**, showcasing our ability to build impactful solutions for real-world problems.

### 3. Challenges that Motivate Me

I enjoy solving problems that require creativity and logical thinking and that have a direct impact on people's lives. Whether it's optimizing a process, improving user experience, or automating repetitive tasks, I find motivation in challenges that push me to learn, adapt, and innovate.

### 4. GSoC Commitment

I will be working part-time on GSoC, as I am a 2nd-year student and need to balance my studies alongside the project.

### 5. Syncing with Mentors

Yes, I am open to regular sync-ups with project mentors to ensure steady progress.

### 6. Interest in APIDash

APIDash stands out because of its lightweight, Flutter-based architecture, which makes it an efficient alternative to tools like Postman. I am particularly excited about extending its modular design, enhancing API discovery, and integrating AI-based automation for better API management.

### 7. Project Improvements

While APIDash provides a great developer experience, some areas for improvement include:

- Improving UI responsiveness on lower-end devices.
- Expanding API import/export options for better interoperability.
- Enhancing API security validation and error-handling mechanisms.

## Project Proposal Information

### Proposal Title: API Explorer for APIDash

### Abstract

This project aims to enhance the APIDash user experience by integrating a curated library of popular and publicly available APIs. The feature will allow users to discover, browse, search, and import API endpoints into their workspace for seamless testing. It will include pre-configured API request templates with authentication details, sample payloads, and expected responses, reducing manual setup time.

## Weekly Timeline

| **Week** | **Focus** | **Key Deliverables & Achievements** |
|----------|-----------|-------------------------------------|
| **Week 1-2** | Community Bonding & Planning | Engage with the APIDash team, review the existing codebase, and gather insights. Define project scope, objectives, and a clear technical roadmap. **Outcome:** Detailed project plan and initial architecture design. |
| **Week 3-4** | API Parsing & Categorization | Develop a backend parser to extract API endpoints, request methods, and metadata from OpenAPI/HTML files. Implement an auto-tagging mechanism to categorize APIs effectively. **Outcome:** A functional parser that outputs structured API data. |
| **Week 5-6** | Data Enrichment & Template Generation | Build the automation pipeline to enrich parsed API data, adding details like sample payloads, authentication requirements, and expected responses. Generate pre-configured API request templates automatically. **Outcome:** End-to-end pipeline for creating API templates. |
| **Week 7-8** | UI Integration & Search Functionality | Integrate the backend pipeline with APIDash's frontend. Develop a user-friendly interface for browsing, searching, and importing APIs into the workspace. **Outcome:** Interactive API Explorer UI with smooth API discovery and import features. |
| **Week 9-10** | Community Features & Optimization | Implement optional community features such as user ratings and reviews, and enhance GitHub integration for community contributions. Fine-tune performance and usability based on user feedback. **Outcome:** A more engaging and optimized tool for API discovery. |
| **Week 11-12** | Testing, Documentation & Finalization | Conduct comprehensive end-to-end testing, resolve any issues, and prepare detailed documentation and user guides. **Outcome:** A robust, well-documented API Explorer ready for deployment and community use. |
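The Week 3-4 parser could start from something like the Dart sketch below. It walks the `paths` object of an OpenAPI 3.x document and lists each operation with its method, path, summary, and tags. The sketch assumes JSON input; YAML specs would need converting first. `ApiEndpoint` and `extractEndpoints` are hypothetical names, not existing APIDash code.

```dart
import 'dart:convert';

/// One HTTP operation found in an OpenAPI document (hypothetical model).
class ApiEndpoint {
  final String method;
  final String path;
  final String summary;
  final List<String> tags;

  const ApiEndpoint(this.method, this.path, this.summary, this.tags);

  @override
  String toString() => '$method $path: $summary';
}

/// Keys under an OpenAPI path item that denote HTTP operations.
const Set<String> _httpMethods = {
  'get', 'put', 'post', 'delete', 'options', 'head', 'patch', 'trace',
};

/// Lists every operation in an OpenAPI 3.x document supplied as JSON text.
List<ApiEndpoint> extractEndpoints(String openApiJson) {
  final doc = jsonDecode(openApiJson) as Map<String, dynamic>;
  final paths = (doc['paths'] as Map<String, dynamic>?) ?? const {};
  final endpoints = <ApiEndpoint>[];

  paths.forEach((path, pathItem) {
    if (pathItem is! Map<String, dynamic>) return;
    pathItem.forEach((key, operation) {
      // Skip shared keys such as "parameters", "summary" or "servers".
      if (!_httpMethods.contains(key) || operation is! Map<String, dynamic>) {
        return;
      }
      endpoints.add(ApiEndpoint(
        key.toUpperCase(),
        path,
        (operation['summary'] as String?) ?? '',
        ((operation['tags'] as List?) ?? const []).cast<String>(),
      ));
    });
  });
  return endpoints;
}
```

Consider a spec whose `/pets` path defines `get`, `post`, and a shared `parameters` list. For that spec, the sketch yields two endpoints and ignores `parameters`. The collected `tags` are one natural input for the auto-tagging step.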

## Conclusion

The **API Explorer for APIDash** project aims to enhance user experience by providing a seamless way to discover, browse, and integrate publicly available APIs. By automating API parsing, categorization, and enrichment, the project will reduce onboarding time and improve efficiency for developers.

This project aligns well with my skills and interests, and I am eager to contribute to the APIDash ecosystem through this project.

# API Dash - GSoC 2025 Proposal

## About

1. Full Name: Jenny (An-Chieh) Cheng
2. Contact info (email, phone, etc.): jennyc28@uci.edu
3. Discord handle: jennyyyy0954
4. GitHub profile link: https://github.com/Jennyyyy0212
5. LinkedIn: https://www.linkedin.com/in/an-chieh-cheng/
6. Time zone: Pacific Time / Los Angeles (GMT-7)
7. Link to a resume: [Link](https://drive.google.com/file/d/1wN6gdueKzCLeDX9STjcd0X0T4oS76mKl/view?usp=sharing)

## University Info

1. University name: University of California, Irvine
2. Program you are enrolled in (Degree & Major/Minor): Master of Software Engineering
3. Year: 2024
4. Expected graduation date: Dec 2025

## Motivation & Past Experience

Short answers to the following questions (Add relevant links wherever you can):

1. Have you worked on or contributed to a FOSS project before? Can you attach repo links or relevant PRs?

No, but I am eager to start contributing.

2. What is your one project/achievement that you are most proud of? Why?

One project I'm most proud of is a [Movie Tracking application](https://github.com/Jennyyyy0212/MovieLog) that I developed on my own. It involved API creation, frontend development, and database management. This project was challenging and rewarding because I had to build everything from scratch. That required me to understand and implement each part, from designing the UI to creating the APIs and managing the database.

3. What kind of problems or challenges motivate you the most to solve them?

Problems that require creative thinking, and whose solutions automate workflows or improve the user experience.

4. Will you be working on GSoC full-time? In case not, what will you be studying or working on while working on the project?

Yes, I will work on GSoC full-time.

5. Do you mind regularly syncing up with the project mentors?

No, I don't mind. I am happy to sync up with the mentors and get their feedback.

6. What interests you the most about API Dash?

I'm interested in API Dash because it brings API creation and testing together in one tool. It serves as an open-source alternative to tools like Postman and Insomnia. In particular, it aligns with my interest in improving developer workflows through a clean interface and efficient API management. The test preview feature also makes it a valuable tool for developers.

7. Can you mention some areas where the project can be improved?

- Integrate with VS Code or other IDEs as an extension
- Allow more custom themes or layouts in the UI, such as a dark theme
- Enable users to schedule or automate API testing (e.g., daily, weekly, or after a code change) instead of testing APIs manually

## Project Proposal Information

### 1. Proposal Title

API Explorer - Library for API templates

### 2. Abstract

This project aims to simplify API setup by letting users easily discover, browse, search, and import popular, publicly available APIs. Developers will be able to quickly access pre-configured API templates with authentication details, sample data, and expected responses, so they don't need to set them up manually. The APIs will be organized into categories like AI, finance, weather, and social media, making it easy for users to find what they need. The backend will automate parsing OpenAPI and HTML files, tagging APIs with relevant categories, enriching the data, and creating templates. Features like user ratings, reviews, and community contributions via GitHub will help keep the resources up to date and relevant.
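The automatic tagging described in the abstract could begin as a simple keyword score, as in the sketch below. The category list and keywords are illustrative assumptions, not a curated taxonomy. `categorize` is a hypothetical helper; an LLM- or embedding-based classifier could later replace it behind the same signature.

```dart
/// Hypothetical keyword table: category -> words that suggest it.
const Map<String, List<String>> categoryKeywords = {
  'AI': ['llm', 'completion', 'embedding', 'inference', 'model'],
  'Finance': ['stock', 'currency', 'exchange rate', 'payment', 'crypto'],
  'Weather': ['weather', 'forecast', 'temperature', 'humidity'],
  'Social Media': ['tweet', 'follower', 'timeline', 'profile', 'feed'],
};

/// Returns categories whose keywords occur in [description],
/// ranked by how many distinct keywords matched (most first).
List<String> categorize(String description, {int minHits = 1}) {
  final text = description.toLowerCase();
  final scores = <String, int>{};
  categoryKeywords.forEach((category, keywords) {
    // Plain substring matching: simple, but "feed" also matches "feedback".
    final hits = keywords.where(text.contains).length;
    if (hits >= minHits) scores[category] = hits;
  });
  return scores.keys.toList()
    ..sort((a, b) => scores[b]!.compareTo(scores[a]!));
}
```

Substring matching produces false positives, as noted in the comment. Word-boundary regular expressions and a higher `minHits` would make the tagging stricter.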

### 3. Detailed Description

#### Problem Statement

Currently, developers can use API Dash only once they already have APIs to work with. Creating APIs or searching for public APIs manually takes time, and configuring requests by hand is error-prone, especially for developers new to a service.

#### Proposed Solution

- Parse API documentation (OpenAPI YAML or HTML) to automatically extract key components like endpoints, authentication methods, parameters, and responses.
- Generate pre-configured API request templates (GET, POST, PUT, DELETE) with the necessary details.
- Categorize APIs based on functionality.
- Provide a search and filter system to help developers quickly find APIs by category, name, or tags.
- Support user feedback features, so users can rate and comment on APIs.
- Import APIs directly into the workspace, reducing setup time and improving the workflow for developers.
- Fetch the latest APIs periodically.
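The search-and-filter bullet could be prototyped along the lines below. The sketch uses a hypothetical `CatalogEntry` model and a `searchCatalog` function that filters by optional category and tag. It also matches free text case-insensitively against name or description. The field names are assumptions, not an existing API Dash model.

```dart
/// Hypothetical catalog entry for one public API.
class CatalogEntry {
  final String name;
  final String description;
  final String category;
  final Set<String> tags;

  const CatalogEntry({
    required this.name,
    required this.description,
    required this.category,
    this.tags = const {},
  });
}

/// Filters [entries] by an optional free-text [query], [category] and [tag].
/// Null or empty filters are ignored; text matching is case-insensitive.
List<CatalogEntry> searchCatalog(
  List<CatalogEntry> entries, {
  String? query,
  String? category,
  String? tag,
}) {
  final q = query?.trim().toLowerCase() ?? '';
  return entries.where((e) {
    if (category != null && category.isNotEmpty && e.category != category) {
      return false;
    }
    if (tag != null && tag.isNotEmpty && !e.tags.contains(tag)) return false;
    if (q.isEmpty) return true;
    return e.name.toLowerCase().contains(q) ||
        e.description.toLowerCase().contains(q);
  }).toList();
}
```

Filters combine with AND semantics. For example, `searchCatalog(entries, query: 'weather', category: 'Weather')` keeps only entries that pass both checks.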

### 4. Weekly Timeline

| Week | Goals/Activities | Deliverables |
|------|------------------|--------------|
| 1 | Set up project environment and repositories. Familiarize with API Dash architecture. Define project requirements and scope. Begin early design concepts for the UI/UX. | Project repository initialized. Environment and tools set up. Project scope and requirements document. Initial UI/UX design concepts. |
| 2 | Research OpenAPI and HTML parsing libraries. Choose parsing tools and libraries. Continue refining UI/UX design based on project goals. | List of tools and libraries for parsing and scraping APIs. Refined UI/UX design wireframes. |
| 3 | Begin implementing OpenAPI parsing functionality. Set up the database schema for API metadata. Finalize initial UI/UX wireframes and layout design. | Basic OpenAPI parsing for extracting endpoints and parameters. Initial database schema design for storing API info. Finalized UI/UX wireframes. |
| 4 | Complete OpenAPI parser and test with sample files. Begin categorization of APIs based on keywords. Start designing the UI components for the API Explorer interface. | OpenAPI parser code with test results. Categorization logic for APIs. Early-stage UI component designs. |
| 5 | Start implementing HTML scraper for APIs without OpenAPI. Test parser with real-world API documentation. Iterate on UI/UX design with feedback from initial API interface mockups. | HTML scraping tool for parsing API documentation. Test results from HTML scraping. UI/UX design iteration based on feedback. |
| 6 | Implement enrichment features (authentication, versioning, etc.). Develop automatic categorization based on descriptions. Continue refining UI/UX design, focusing on API explorer features. | Enrichment logic for API metadata. Automated categorization model or keyword-based system. Refined UI/UX design for API explorer features. |
| 7 | Start template generation for API requests (GET, POST). Add sample payloads and expected responses to templates. Continue refining UI components and user flow. | Template generation code for API requests. Sample request/response templates for testing. Refined UI components and user flow design. |
| 8 | Refine template generation for all API methods (PUT, DELETE). Implement authentication template generation (e.g., API keys). Finalize the UI/UX design for the entire API Explorer workflow. | Complete set of API request templates. Authentication logic integrated into templates. Finalized UI/UX design for API Explorer. |
| 9 | Begin integration of user feedback system (ratings, reviews). Test user feedback system with a sample set of APIs. Start implementing UI for user feedback and ratings. | User feedback feature integrated into API Dash. UI for user ratings and reviews. |
| 10 | Implement API search and filter functionality. Integrate categorized and enriched APIs into the workspace. Finalize the UI for the search/filter and API results display. | Search and filter system for APIs. API Explorer interface integrated with categorized APIs. Finalized UI for search and results display. |
| 11 | Finalize real-time testing features (API execution in UI). Perform end-to-end testing and debugging. Conduct user testing for UI/UX feedback. | Real-time API testing feature completed. Final bug fixes and refinements. User feedback on UI/UX design. |
| 12 | Conduct final testing and gather feedback. Prepare documentation for the project. Deploy and release API Explorer feature. Finalize UI/UX design adjustments based on testing feedback. | Final version of API Explorer feature. Complete documentation (API usage, setup, etc.). API Explorer released and deployed. Finalized UI/UX design. |

# GSoC Proposal for DashBot

## About Me

**Full Name:** Mrudul Killedar

**Email:** mrudulkilledar111@gmail.com

**Phone:** +91 7489685683

**Discord Handle:** Mrudul (username: mk4104)

**GitHub Profile:** https://github.com/Mrudul111

**LinkedIn:** https://www.linkedin.com/in/mrudul-killedar-5b0121245

**Time Zone:** GMT+5:30

**Resume:** https://drive.google.com/file/d/1ICvI5h8FP5cTtMIvQcu918Adc5JP1DQb/view?usp=share_link

## University Information

**University Name:** Vellore Institute of Technology

**Program:** B.Tech in Computer Science Engineering

**Year:** 2025

**Expected Graduation Date:** July 2025

## Motivation & Past Experience

**1. Have you worked on or contributed to a FOSS project before?**

Yes, I have contributed to API Dash:

- Beautify JSON and Highlight JSON - https://github.com/foss42/apidash/pull/595
- Share button functionality - https://github.com/foss42/apidash/pull/571#event-16324056931
- Homebrew installation guide - https://github.com/foss42/apidash/pull/566#event-16282262849
- Multiple-model support in DashBot - https://github.com/foss42/apidash/pull/704

**2. What is your one project/achievement that you are most proud of? Why?**

I am most proud of two projects. The first is Parking24, an app built to solve parking problems in the UAE market. I was assigned this project during my internship at Wisho (now called Hyve AI Labs). I am proud of it because it adds real-world value and solves a very real problem.

I have also been working on a machine learning model that predicts cybersickness using an SNN. We are currently achieving an accuracy of 87.234%, compared with 76.11% for the existing model. We achieved this because of our better approach to data cleaning.

**3. What kind of problems or challenges motivate you the most to solve them?**

I am most motivated by solving complex problems that challenge me to think in new ways and push my boundaries. I enjoy tackling problems that I have never encountered before, as they provide an opportunity to learn, explore innovative solutions, and develop a deeper understanding of different technologies. The thrill of breaking down a difficult problem, analyzing it from different angles, and coming up with an effective solution is what drives me the most.

**4. Will you be working on GSoC full-time?**

Yes, I will be working full-time on my GSoC project.

**5. Do you mind regularly syncing up with the project mentors?**

Not at all! I am happy to have regular sync-ups with my mentors to ensure smooth progress and alignment with project goals.

**6. What interests you the most about API Dash?**

API Dash is an innovative tool that simplifies API testing and monitoring. I personally find other apps very bloated, with clunky performance. API Dash, on the other hand, is smooth and aesthetically pleasing, and its use of AI sets it apart from other API testing platforms. I have personally switched to API Dash for my backend testing. I would love to contribute to API Dash because I feel it adds great value to the developer community.

**7. Can you mention some areas where the project can be improved?**

Some areas where API Dash can be improved include:

- **UI for DashBot:** The current UI is very basic and lacks a polished, professional feel.
- **Responses:** The responses generated by clicking the buttons are on point, but the bot is not conversational enough.

|

||||

|

||||

|

||||

## Project Proposal Information

|

||||

### **Proposal Title:** DashBot

|

||||

|

||||

### **Abstract**

|

||||

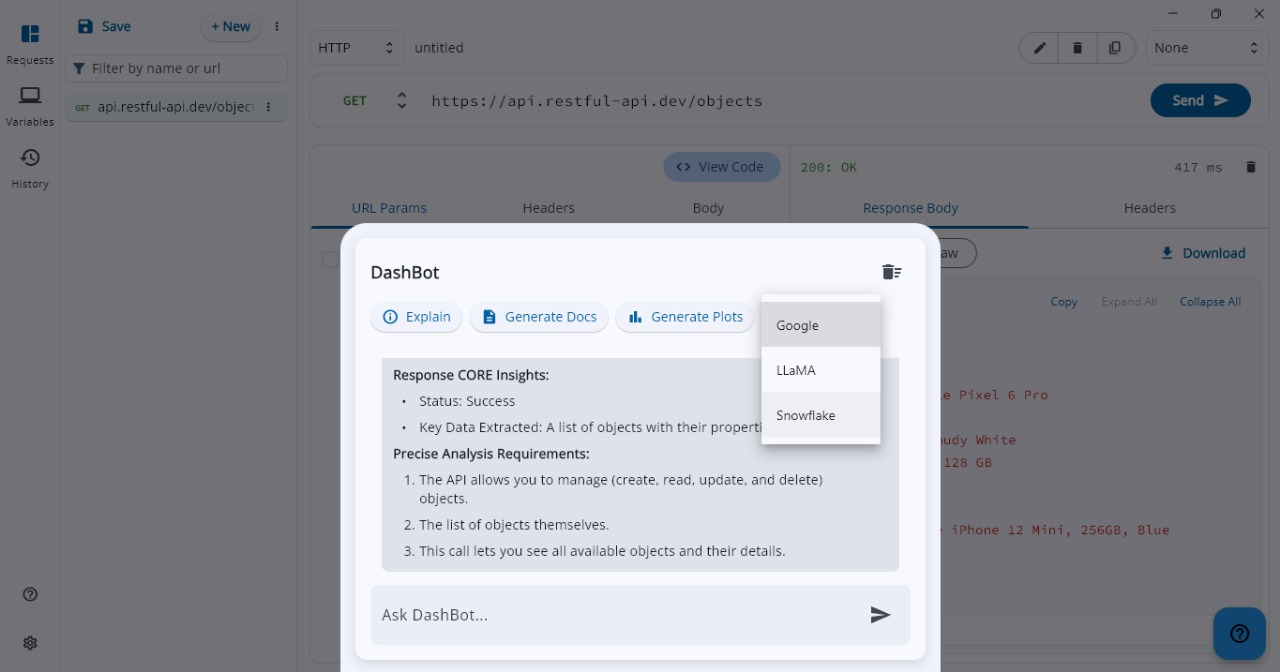

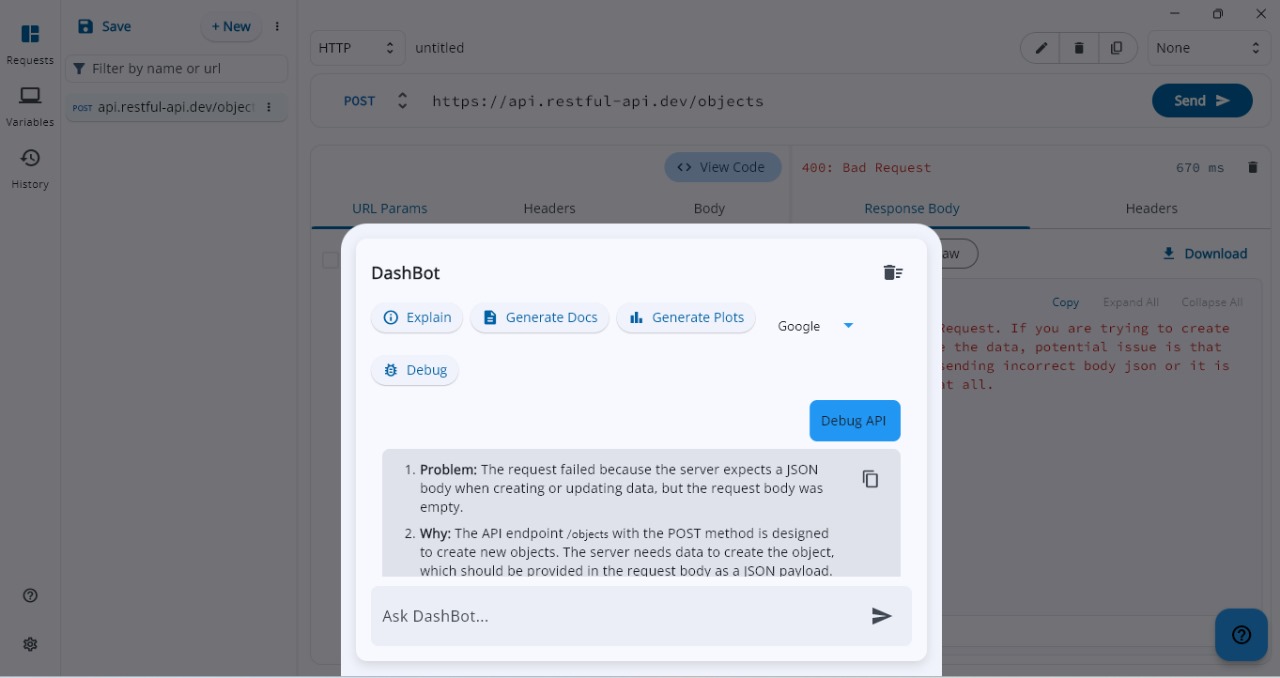

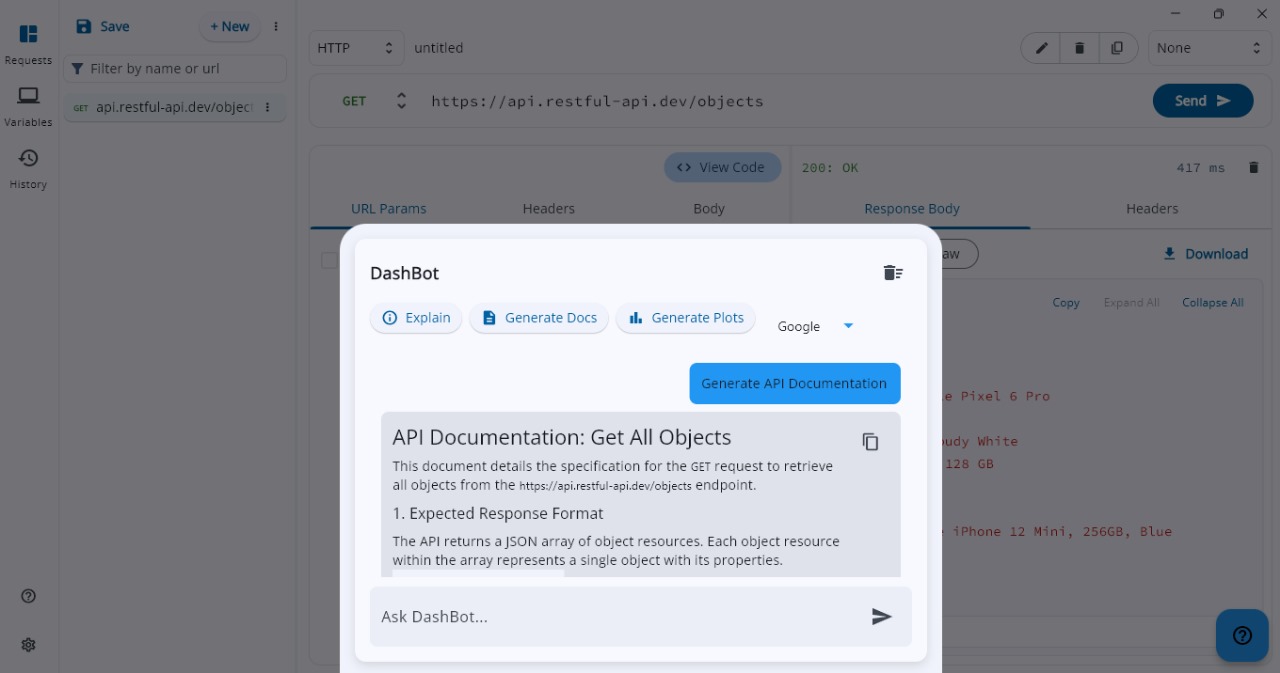

DashBot is an AI-powered assistant designed to supercharge developer productivity within API Dash by automating repetitive tasks, improving API debugging, and providing intelligent recommendations. By leveraging advanced large language models (LLMs), DashBot enables developers to interact with APIs using natural language, making API testing, debugging, documentation, and integration significantly more efficient and intuitive.

|

||||

|

||||

### **Detailed Description**

|

||||

DashBot is designed to be an AI-powered assistant for API Dash that helps developers automate tedious tasks, follow best practices, and obtain contextual suggestions via natural-language input. First we need to finish tasks that are yet to be finished in the first prototype which include:

|

||||

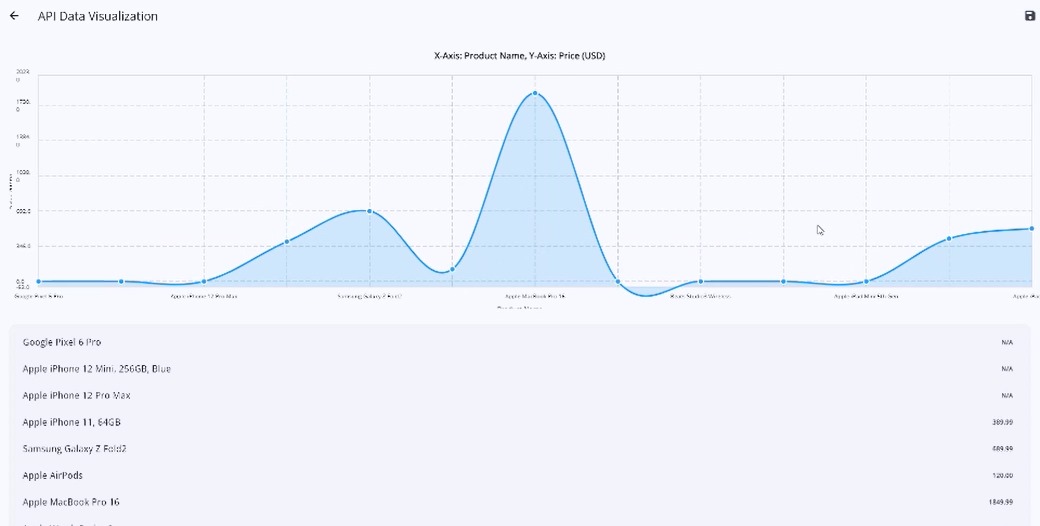

1. Generate plots & visualizations for API responses along with ability to customize

|

||||

2. Generate API integration frontend code for frontend frameworks like React, Flutter, etc.
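As an illustration of item 2, the sketch below turns a simple request description into a JavaScript `fetch` call that a React component could use. `ApiRequest` and `toFetchSnippet` are hypothetical names. API Dash's existing code generators and request model are not reproduced here. In DashBot, an LLM might adapt such a deterministic template to the user's framework and style.

```dart
import 'dart:convert';

/// Minimal, hypothetical request description used only in this sketch.
class ApiRequest {
  final String method;
  final String url;
  final Map<String, String> headers;
  final Object? jsonBody;

  const ApiRequest(this.method, this.url,
      {this.headers = const {}, this.jsonBody});
}

/// Builds a JavaScript `fetch` snippet (e.g. for a React component) for [req].
/// The output uses `await`, so it belongs inside an async function.
String toFetchSnippet(ApiRequest req) {
  final encoder = JsonEncoder.withIndent('  ');
  final hasBody = req.jsonBody != null;
  final options = <String, Object?>{
    'method': req.method.toUpperCase(),
    if (req.headers.isNotEmpty || hasBody)
      'headers': {
        ...req.headers,
        if (hasBody) 'Content-Type': 'application/json',
      },
  };
  final buf = StringBuffer()
    ..writeln('const options = ${encoder.convert(options)};');
  if (hasBody) {
    // jsonEncode yields a valid JS literal that JSON.stringify re-serializes.
    buf.writeln('options.body = JSON.stringify(${jsonEncode(req.jsonBody)});');
  }
  buf
    ..writeln('const response = await fetch(${jsonEncode(req.url)}, options);')
    ..writeln('const data = await response.json();');
  return buf.toString();
}
```

Generating the skeleton deterministically keeps the URL, method, and headers exact. Only stylistic adaptation would then be left to the model.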

This project extends DashBot's capabilities with the following advanced features:

#### **1. AI-Powered Code Error Detection & Auto-Fix**

- Detect syntax and logical errors in API requests and integration code.
- Provide human-readable explanations and suggest one-click fixes.
- Ensure best practices in authentication, rate limiting, and error handling.
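Some request problems can be caught with plain rules before any LLM call. The model could then be asked only to explain or fix what the rules flag. The sketch below is a hypothetical rule-based pre-check; `RequestIssue` and `lintRequest` are assumed names. It flags a malformed URL, plain HTTP, a body on GET/HEAD, and a body sent without a `Content-Type` header.

```dart
/// A problem found in a request, with a human-readable hint.
class RequestIssue {
  final String severity; // 'error' or 'warning'
  final String message;
  const RequestIssue(this.severity, this.message);

  @override
  String toString() => '[$severity] $message';
}

/// Runs simple, deterministic checks on a request before it is sent.
List<RequestIssue> lintRequest({
  required String method,
  required String url,
  Map<String, String> headers = const {},
  String? body,
}) {
  final issues = <RequestIssue>[];
  final uri = Uri.tryParse(url);
  if (uri == null || !uri.hasScheme || uri.host.isEmpty) {
    issues.add(const RequestIssue(
        'error', 'URL is malformed or missing a scheme/host.'));
  } else if (uri.scheme == 'http') {
    issues.add(const RequestIssue(
        'warning', 'Plain HTTP may expose credentials; prefer HTTPS.'));
  }
  final hasBody = body != null && body.isNotEmpty;
  final m = method.toUpperCase();
  if (hasBody && (m == 'GET' || m == 'HEAD')) {
    issues.add(RequestIssue(
        'warning', '$m requests with a body are often ignored by servers.'));
  }
  // Header names are case-insensitive in HTTP.
  final headerNames = headers.keys.map((k) => k.toLowerCase()).toSet();
  if (hasBody && !headerNames.contains('content-type')) {
    issues.add(const RequestIssue(
        'warning', 'Body present but no Content-Type header is set.'));
  }
  return issues;
}
```

The deterministic issues give the LLM concrete, verifiable context. This should make its explanations and suggested fixes less likely to drift.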

#### **2. Multi-Model Support & Fine-Tuning**

- Enable users to switch between different LLMs (GPT, Llama, Claude, Gemini, etc.).
- Provide on-device LLM support for private inference.
- Allow user-defined prompt fine-tuning for personalized suggestions.
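Model switching could be organized around a small provider interface that each backend implements. DashBot would then keep a registry plus the user's current choice. In the hypothetical sketch below, `ChatProvider`, `ModelRegistry`, and the stand-in `EchoProvider` are illustrative names. Real providers would wrap the cloud or Ollama client packages the proposal plans to use; their APIs are intentionally not reproduced. The optional `systemPrompt` stands in for user-defined prompt customization.

```dart
/// Common interface that every model backend (cloud or on-device) implements.
abstract class ChatProvider {
  String get id;
  bool get runsLocally;
  Future<String> complete(String prompt, {String? systemPrompt});
}

/// Stand-in provider for testing; a real one would call an LLM client.
class EchoProvider implements ChatProvider {
  @override
  final String id;
  @override
  final bool runsLocally;

  EchoProvider(this.id, {this.runsLocally = false});

  @override
  Future<String> complete(String prompt, {String? systemPrompt}) async =>
      '[$id] ${systemPrompt == null ? '' : '($systemPrompt) '}$prompt';
}

/// Holds the available providers and the one currently selected.
class ModelRegistry {
  final Map<String, ChatProvider> _providers = {};
  String? _activeId;

  void register(ChatProvider provider) {
    _providers[provider.id] = provider;
    _activeId ??= provider.id; // the first registered provider is the default
  }

  void select(String id) {
    if (!_providers.containsKey(id)) {
      throw ArgumentError.value(id, 'id', 'Unknown model provider');
    }
    _activeId = id;
  }

  ChatProvider get active {
    final id = _activeId;
    if (id == null) throw StateError('No model provider registered');
    return _providers[id]!;
  }

  /// Providers that keep data on the device, for privacy-sensitive users.
  Iterable<ChatProvider> get localProviders =>
      _providers.values.where((p) => p.runsLocally);
}
```

A small interface keeps on-device and cloud models interchangeable. `localProviders` would let the UI offer only on-device models when a user opts for private inference.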

#### **3. Enhanced UI/UX for API Dash**

Improve the overall user experience of API Dash by making the interface more intuitive, visually appealing, and developer-friendly.

- **Modern UI Elements:** Redesigned buttons, input fields, and layouts for a clean and professional look.
- **Dark & Light Mode Support:** Seamless theme switcher for better accessibility.
- **Improved API Request/Response Visualization:** Better syntax highlighting, collapsible sections, and JSON tree views for responses.
- **Enhanced Error Debugging UI:** Clear, structured error messages with AI-powered suggestions for fixes.
- **Keyboard Shortcuts & Command Palette:** Faster workflows with keyboard commands.

#### **4. API Documentation for Tech Stack Integration**

- Provide **step-by-step guides** for React, Flutter, Vue, Express.js, and more, adapting the response to the coding practices the user follows.
- Allow **Markdown and PDF export** for easy sharing.

### **Packages Used**

This project will use the following packages to implement the proposed features:

- [`anthropic_sdk_dart`](https://pub.dev/packages/anthropic_sdk_dart) - Claude integration
- [`googleai_dart`](https://pub.dev/packages/googleai_dart) - Google AI model support
- [`openai_dart`](https://pub.dev/packages/openai_dart) - OpenAI API access
- [`ollama_dart`](https://pub.dev/packages/ollama_dart) - Ollama (local model) inference
- [`fl_chart`](https://pub.dev/packages/fl_chart) - API response visualization

### **Figma Link**

https://www.figma.com/design/vzpQ7xzwwmx2G92VVyF4aw/GSOC-Proposal?node-id=0-1&t=7D6Njm8Rr2x6VCkx-1

## **Weekly Timeline**

### **Week 1: Initial Research & Understanding API Dash**

**Tasks:**

- Study API Dash's existing codebase.
- Research AI assistant implementations.
- Set up the development environment.

### **Week 2: Finalizing Tech Stack & Initial UI Prototyping**

**Tasks:**

- Decide on AI models & packages to be integrated in DashBot.
- Finalize the UI wireframe.
- Create a separate development branch for DashBot features.

### **Week 3-4: UI/UX Enhancements**

**Tasks:**

- Implement DashBot Panel UI.
- Add theme support (light & dark mode).
- Improve API response display with syntax highlighting.
- Implement API request history tracking and auto-suggestions.

### **Week 5: Frontend Code Generation**

**Tasks:**

- Generate API integration code for React, Flutter, Vue.
- Allow one-click copy of generated code.

### **Week 6: API Response Visualization**

**Tasks:**

- Implement data visualization for API responses.
- Add customization options for plots.

**Deliverable:** Interactive API response visualizations.
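Before plotting, DashBot needs to know which fields of a response can be charted at all. The sketch below is one simple heuristic, assuming the response is a JSON array of objects. It keeps only fields that are numeric in every item and uses the item index as the x-axis. `numericSeries` and `ChartPoint` are hypothetical names, and mapping the points onto `fl_chart` widgets is left out.

```dart
import 'dart:convert';

/// An (x, y) pair ready to hand to a charting widget.
class ChartPoint {
  final double x;
  final double y;
  const ChartPoint(this.x, this.y);
}

/// For a JSON array of objects, returns every field that is numeric in all
/// items, as points where x is the item index and y is the field value.
Map<String, List<ChartPoint>> numericSeries(String responseBody) {
  final decoded = jsonDecode(responseBody);
  if (decoded is! List || decoded.isEmpty) return {};
  final items = decoded.whereType<Map<String, dynamic>>().toList();
  if (items.length != decoded.length) return {}; // mixed array: not chartable

  final series = <String, List<ChartPoint>>{};
  for (final key in items.first.keys) {
    final points = <ChartPoint>[];
    for (var i = 0; i < items.length; i++) {
      final value = items[i][key];
      if (value is! num) {
        points.clear(); // drop fields that are missing or non-numeric anywhere
        break;
      }
      points.add(ChartPoint(i.toDouble(), value.toDouble()));
    }
    if (points.isNotEmpty) series[key] = points;
  }
  return series;
}
```

The plot customization options could then start from this list: the user picks which series to draw, and a timestamp field could replace the index on the x-axis.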

### **Week 7: AI-Powered Code Error Detection**

**Tasks:**

- Implement AI-powered debugging for API requests.
- Ensure compliance with API best practices.
- Optimize the AI model for faster debugging.

### **Week 8: Multi-Model Support & Fine-Tuning**

**Tasks:**

- Implement model switching UI.
- Connect multiple AI models via API.
- Integrate on-device AI support.
- Enable custom prompt fine-tuning.

### **Week 9: Documentation for Integrating APIs into a Specific Tech Stack**

**Tasks:**

- Generate API integration documentation for multiple languages (Python, JavaScript, Java, Flutter, etc.).
- Create UI for selecting the tech stack and displaying relevant API documentation.

### **Week 10-11: Testing, Debugging & Optimizations**

**Tasks:**

- Conduct unit & integration testing.
- Fix bugs & optimize performance.
- Gather feedback from mentors & community.

### **Week 12: Documentation, Final Touches & Submission**

**Tasks:**

- Write detailed documentation & API reference.
- Create a demo video & presentation.
- Prepare & submit the final report to GSoC.

---

# GSoC 2025 Proposal: AI UI Designer for APIs

## About

**Full Name**: Ning Wei

**Contact Info**: Allenwei0503@gmail.com

**Discord Handle**: @allen_wn

**GitHub Profile**: [https://github.com/AllenWn](https://github.com/AllenWn)

**LinkedIn**: [https://www.linkedin.com/in/ning-wei-allen0503](https://www.linkedin.com/in/ning-wei-allen0503)

**Time Zone**: UTC+8

**Resume**: https://drive.google.com/file/d/1Zvf1IhKju3rFfnDsBW1WmV40lz0ZMNrD/view?usp=sharing

## University Info

**University**: University of Illinois at Urbana-Champaign

**Program**: B.S. in Computer Engineering

**Year**: 2nd year undergraduate

**Expected Graduation**: May 2027

---

## Motivation & Past Experience

1. **Have you worked on or contributed to a FOSS project before?**

Not yet officially, but I've been actively exploring open-source projects like API Dash and contributing through discussions and design planning. I am currently studying the API Dash repository and developer guide to prepare for my first PR.

2. **What is your one project/achievement that you are most proud of? Why?**

I'm proud of building an AI-assisted email management app using Flutter and Go, which automatically categorized and responded to emails using the ChatGPT API. It gave me end-to-end experience in integrating APIs, generating dynamic UIs, and designing developer-friendly tools.

3. **What kind of problems or challenges motivate you the most to solve them?**

I enjoy solving problems that eliminate repetitive work for developers and improve workflow productivity, especially through automation and AI integration.

4. **Will you be working on GSoC full-time?**

Yes. I will be dedicating myself full-time to this project during the summer.

5. **Do you mind regularly syncing up with the project mentors?**

Not at all. I look forward to regular syncs and feedback to stay aligned with the project vision.

6. **What interests you the most about API Dash?**

API Dash focuses on improving the developer experience around APIs, which is something I care deeply about. I love the vision of combining UI tools with AI assistance in a privacy-first, extensible way.

7. **Can you mention some areas where the project can be improved?**

- More intelligent code generation from API response types
- Drag-and-drop UI workflow
- Visual previews and theme customization
- Integration with modern LLMs for field-level naming and layout suggestions

---

## Project Proposal Information

### Proposal Title

AI UI Designer for APIs

### Relevant Issues: [#617]

### Abstract

This project proposes the development of an AI-powered UI generation assistant within the API Dash application. The tool will automatically analyze API responses (primarily in JSON format), infer their structure, and dynamically generate Flutter-based UI components such as tables, forms, or cards. Developers will be able to preview, customize, and export these layouts as usable Dart code. By combining rule-based heuristics with optional LLM (e.g., Ollama, GPT) enhancements, the feature aims to streamline API data visualization and speed up frontend prototyping. The generated UI will be clean, modular, and directly reusable in real-world Flutter applications.

---

### Detailed Description

This project introduces a new feature into API Dash: the AI UI Designer. It is an intelligent assistant that takes an API response and converts it into dynamic UI components, allowing developers to quickly visualize, customize, and export frontend code based on live API data. It will analyze the data and suggest corresponding UI layouts using Dart/Flutter widgets such as `DataTable`, `Card`, or `Form`.

#### Step 1: Parse API Response Structure

The first step is to understand the structure of the API response, which is usually in JSON format. The goal is to transform the raw response into an intermediate schema that can guide UI generation.

- Most API responses are either:
  - **Object:** A flat or nested key-value map.
  - **Array of objects:** A list of items, each following a similar structure.
- Understanding the structure allows us to decide:
  - What kind of UI component fits best (e.g., table, form, card).
  - How many fields to show, and how deep the nesting goes.
- Common field types (string, number, boolean, array, object) impact widget selection.
- Special patterns (e.g., timestamps, emails, URLs) can be detected and used to enhance the UI.

##### Implementation Plan:

- **Start with JSON**
  - Initially, support only JSON input, as it is the most common format.
  - Use Dart's built-in `dart:convert` library to parse the response.
- **Build a Recursive Schema Parser**
  - Traverse the JSON response recursively.
  - For each node (key), determine:
    - Type: string, number, bool, object, array
    - Optional metadata (e.g., nullability, format hints)
    - Depth and parent-child relationships
  - Output a tree-like structure such as:

```json
{
  "type": "object",
  "fields": [
    {"key": "name", "type": "string"},
    {"key": "age", "type": "number"},
    {"key": "profile", "type": "object", "fields": [...]},
    {"key": "posts", "type": "array", "itemType": "object", "fields": [...]}
  ]
}
```

- **Detect Patterns (Optional AI Help)**
  - Apply heuristics or regex to detect:
    - Timestamps: ISO strings, epoch time
    - Prices: numeric values with currency signs
    - Boolean flags: `isActive`, `enabled`, etc.
  - This helps in choosing smart widgets (e.g., `Switch` for booleans).
- **Create a Schema Class**
  - Implement a Dart class (e.g., `ParsedSchema`) to store this structure.
  - This class will be passed into the UI generation logic in Step 2.
- **Add Support for Validation**
  - Check whether the response is malformed or inconsistent (e.g., arrays with mixed types).
  - If it is invalid, show a fallback UI or an error.
- **Future Scope**
  - Add XML support by using XML parsers.
  - Extend the parser to allow user overrides/custom schema mapping.

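The recursive parser described above could look roughly like the following minimal Dart sketch. It assumes the response body has already been fetched as a string; `schemaFromBody`, `parseSchema`, and the exact fields of `ParsedSchema` (including `depth`, `nullable`, and the `'mixed'` item type used to flag inconsistent arrays for the validation step) are hypothetical names chosen to mirror the example tree, not existing API Dash code.

```dart
import 'dart:convert';

/// Intermediate schema node (hypothetical shape, mirroring the example tree).
class ParsedSchema {
  const ParsedSchema({
    required this.type,
    this.key,
    this.depth = 0,
    this.nullable = false,
    this.itemType,
    this.fields = const [],
  });

  final String type; // 'string', 'number', 'bool', 'object', 'array' or 'null'
  final String? key; // null for the root and for array items
  final int depth; // 0 for the root, +1 per nesting level
  final bool nullable; // true if a null value was seen
  final String? itemType; // arrays only: element type, or 'mixed' if inconsistent
  final List<ParsedSchema> fields; // object children, or item fields for arrays

  Map<String, Object?> toJson() => {
        if (key != null) 'key': key,
        'type': type,
        if (nullable) 'nullable': true,
        if (itemType != null) 'itemType': itemType,
        if (fields.isNotEmpty) 'fields': [for (final f in fields) f.toJson()],
      };
}

String _jsonType(Object? value) => switch (value) {
      null => 'null',
      String() => 'string',
      num() => 'number',
      bool() => 'bool',
      List() => 'array',
      Map() => 'object',
      _ => throw ArgumentError.value(value, 'value', 'not a decoded JSON value'),
    };

/// Recursively converts a value produced by jsonDecode into a ParsedSchema.
ParsedSchema parseSchema(Object? value, {String? key, int depth = 0}) {
  if (value is Map) {
    return ParsedSchema(
      type: 'object',
      key: key,
      depth: depth,
      fields: [
        for (final entry in value.entries)
          parseSchema(entry.value, key: '${entry.key}', depth: depth + 1),
      ],
    );
  }
  if (value is List) {
    // Element types, ignoring nulls; more than one type means "mixed".
    final types = {for (final e in value) if (e != null) _jsonType(e)};
    // Use the first object element as the representative row shape.
    final sample = value.firstWhere((e) => e is Map, orElse: () => null);
    return ParsedSchema(
      type: 'array',
      key: key,
      depth: depth,
      nullable: value.contains(null),
      itemType: types.isEmpty ? 'null' : (types.length == 1 ? types.first : 'mixed'),
      fields: sample == null ? const [] : parseSchema(sample, depth: depth + 1).fields,
    );
  }
  return ParsedSchema(type: _jsonType(value), key: key, depth: depth, nullable: value == null);
}

/// Entry point: decode a raw response body and build its schema.
ParsedSchema schemaFromBody(String body) => parseSchema(jsonDecode(body));
```

For a body such as `{"name":"Ada","posts":[{"title":"Hi"}]}`, `jsonEncode(schemaFromBody(body).toJson())` yields a tree in the same `type`/`fields`/`itemType` shape as the example above. Using the first object of an array as the representative row is a simplification; a fuller version could merge the keys of all elements.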

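Pattern detection can start as plain heuristics before any AI is involved. The sketch below is one possible starting point, not a finished detector: the `FormatHint` enum, `detectFormat`, the regular expressions, the key-name hints, and the epoch range (roughly 2001 to 2286, in seconds or milliseconds) are all assumptions.

```dart
/// Hypothetical format hints the widget mapper can use.
enum FormatHint { none, timestamp, epochTime, price, email, url, booleanFlag }

// Heuristic patterns; deliberately simple and easy to extend.
final _isoDateTime = RegExp(
    r'^\d{4}-\d{2}-\d{2}([T ]\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:?\d{2})?)?$');
final _price = RegExp(r'^[$€£₹]\s?\d+([.,]\d{1,2})?$');
final _email = RegExp(r'^[^@\s]+@[^@\s]+\.[^@\s]+$');
final _flagKey = RegExp(r'^(is|has|can|should)[A-Z_]|^(enabled|active|visible|verified)$');
final _timeKey = RegExp(r'(_at|At|time|Time|date|Date|stamp|Stamp)$');

/// Guesses a format hint from a field's key and one sample value.
FormatHint detectFormat(String key, Object? sample) {
  if (sample is String) {
    if (_isoDateTime.hasMatch(sample)) return FormatHint.timestamp;
    if (_price.hasMatch(sample)) return FormatHint.price;
    if (_email.hasMatch(sample)) return FormatHint.email;
    final uri = Uri.tryParse(sample);
    if (uri != null &&
        (uri.isScheme('http') || uri.isScheme('https')) &&
        uri.host.isNotEmpty) {
      return FormatHint.url;
    }
  }
  // Epoch seconds (1e9..1e10) or milliseconds (1e12..1e13), i.e. roughly
  // 2001 to 2286, but only when the key name also suggests a time.
  if (sample is int && _timeKey.hasMatch(key)) {
    final isSeconds = sample >= 1000000000 && sample < 10000000000;
    final isMillis = sample >= 1000000000000 && sample < 10000000000000;
    if (isSeconds || isMillis) return FormatHint.epochTime;
  }
  // Booleans, or 0/1 values, whose key reads like a flag.
  if ((sample is bool || sample == 0 || sample == 1) && _flagKey.hasMatch(key)) {
    return FormatHint.booleanFlag;
  }
  return FormatHint.none;
}
```

Requiring a time-like key before treating an integer as an epoch keeps ordinary IDs and counters from being misread as dates.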

#### Step 2: Design AI Agent Logic

This step involves designing the core logic that maps the parsed API response schema to corresponding UI components. The AI agent will follow a hybrid approach, combining rule-based mapping with optional LLM-powered enhancement for smarter UI suggestions.

##### 2.1 Rule-Based Mapping System

To ensure fast and consistent results, we will first implement a simple rule-based system that maps specific JSON structures to Flutter widgets. This lets us generate a basic layout even in environments where LLMs are unavailable or undesirable.

Example rules:

- If the root is an array of objects → generate a `DataTable`
- If the object contains mostly key-value pairs → generate a `Card` or `Form`
- If fields include timestamps or numeric trends → suggest a `LineChart`
- If keys match common patterns such as email, phone, or price → render them with appropriate widgets (`TextField`, a dropdown, a currency formatter)

These mappings will be implemented as Dart classes and can be loaded from a YAML/JSON config file to support extensibility.

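A minimal sketch of the rule table follows, assuming rules are matched against the decoded JSON (for brevity, rather than the parsed schema) and that key-pattern rules come from a JSON config. `RuleBasedMapper`, `FieldRule`, the `keyPattern`/`widget` config fields, the "more than half scalars" threshold, and the `CurrencyText` widget name used in the test are all hypothetical. Treating a top-level array of numbers as a line chart is a simplified stand-in for the "numeric trends" rule.

```dart
import 'dart:convert';

/// One configurable rule: field keys matching [pattern] use [widget].
class FieldRule {
  FieldRule(this.pattern, this.widget);

  factory FieldRule.fromJson(Map<String, dynamic> json) => FieldRule(
        RegExp(json['keyPattern'] as String, caseSensitive: false),
        json['widget'] as String,
      );

  final RegExp pattern;
  final String widget;
}

/// Hypothetical rule-based mapper from decoded JSON to widget names.
class RuleBasedMapper {
  RuleBasedMapper(this.fieldRules);

  /// Loads rules such as [{"keyPattern": "email", "widget": "TextField"}].
  /// A YAML config could be converted to the same structure first.
  factory RuleBasedMapper.fromConfig(String configJson) => RuleBasedMapper([
        for (final r in jsonDecode(configJson) as List)
          FieldRule.fromJson(r as Map<String, dynamic>),
      ]);

  final List<FieldRule> fieldRules;

  /// Picks the top-level container for the whole response.
  String containerFor(Object? root) {
    if (root is List && root.isNotEmpty) {
      if (root.every((e) => e is Map)) return 'DataTable'; // array of objects
      if (root.every((e) => e is num)) return 'LineChart'; // numeric series
    }
    if (root is Map) {
      // "Mostly key-value pairs": more than half of the values are scalars.
      final scalars = root.values.where((v) => v is! Map && v is! List).length;
      return scalars * 2 > root.length ? 'Card' : 'Column';
    }
    return 'SelectableText';
  }

  /// Picks a widget for one field: configured key rules first, then value type.
  String widgetFor(String key, Object? value) {
    for (final rule in fieldRules) {
      if (rule.pattern.hasMatch(key)) return rule.widget;
    }
    return switch (value) {
      bool() => 'Switch',
      List() => 'DataTable',
      Map() => 'Card',
      _ => 'SelectableText',
    };
  }
}
```

Because key rules are checked before value types, a config entry such as `{"keyPattern": "price|amount", "widget": "CurrencyText"}` can override the default choice for matching fields without code changes.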

##### 2.2 LLM-Powered Enhancements

To go beyond static rules and provide smarter UI suggestions, we will integrate an LLM (e.g., a local model served by Ollama, or GPT via API). The LLM will receive the parsed schema and be prompted to:

- Suggest the layout structure (vertical list, tabs, grouped cards, etc.)
- Label fields more intuitively (e.g., `product_id` → "Product ID")
- Reorder fields based on usage context
- Suggest default values, placeholder text, or icons

Prompt example (using the same `key` naming as the Step 1 schema):

```json
{
  "task": "Generate UI plan for API response",
  "schema": {
    "type": "object",
    "fields": [
      {"key": "username", "type": "string"},
      {"key": "email", "type": "string"},
      {"key": "created_at", "type": "timestamp"}
    ]
  }
}
```

Expected LLM output:

```json
{
  "layout": "vertical_card",
  "fields": [
    {"label": "Username", "widget": "TextField"},
    {"label": "Email", "widget": "TextField"},
    {"label": "Signup Date", "widget": "DateDisplay"}
  ]
}
```

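Before an LLM suggestion reaches the renderer, it is worth validating it, since models can return malformed JSON or invent fields. The following sketch builds a prompt payload in the shape of the prompt example and checks the reply. It assumes each entry in the LLM output also carries the schema `key` it refers to (the example output does not show this), and the allowed widget and layout sets, `buildPrompt`, `parseLlmPlan`, `LayoutPlan`, and `PlannedField` are illustrative names only.

```dart
import 'dart:convert';

/// Only widget and layout names the renderer knows are accepted (assumed lists).
const allowedWidgets = {'TextField', 'SelectableText', 'Switch', 'DataTable', 'Card', 'DateDisplay'};
const allowedLayouts = {'vertical_card', 'vertical_list', 'tabs', 'grouped_cards'};

/// Builds the prompt payload in the shape of the prompt example.
String buildPrompt(Map<String, Object?> schema) =>
    jsonEncode({'task': 'Generate UI plan for API response', 'schema': schema});

class PlannedField {
  PlannedField(this.key, this.label, this.widget);
  final String key, label, widget;
}

class LayoutPlan {
  LayoutPlan(this.layout, this.fields);
  final String layout;
  final List<PlannedField> fields;
}

/// Parses raw LLM text into a LayoutPlan, or returns null so the caller can
/// fall back to the rule-based mapper.
LayoutPlan? parseLlmPlan(String raw, Set<String> schemaKeys) {
  Object? decoded;
  try {
    decoded = jsonDecode(raw);
  } on FormatException {
    return null; // not JSON at all
  }
  if (decoded is! Map<String, dynamic>) return null;
  final layout = decoded['layout'];
  final fields = decoded['fields'];
  if (layout is! String || !allowedLayouts.contains(layout)) return null;
  if (fields is! List) return null;
  final planned = <PlannedField>[];
  for (final f in fields) {
    if (f is! Map) return null;
    final key = f['key'], label = f['label'], widget = f['widget'];
    // Reject invented fields and widgets the renderer cannot build.
    if (key is! String || !schemaKeys.contains(key)) return null;
    if (label is! String || widget is! String || !allowedWidgets.contains(widget)) return null;
    planned.add(PlannedField(key, label, widget));
  }
  return LayoutPlan(layout, planned);
}
```

Returning `null` instead of throwing keeps the fallback decision (Section 2.3) in one place.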

##### 2.3 Fallback and Configuration

- If the LLM call fails or is disabled (e.g., offline use), the system falls back to rule-based logic.
- The user can toggle LLM mode in settings.
- LLM responses will be cached for repeated inputs to reduce latency and cost.

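The fallback, toggle, and caching behaviour above could be combined in a small planner class. This is a sketch under several assumptions: `LlmCall`, `LayoutPlanner`, the 20-second timeout, and caching by the schema JSON string (which here also stands in for the prompt) are hypothetical, and a real implementation would plug an actual output validator in through `isValid`.

```dart
/// Any LLM call that turns a prompt into raw text (hypothetical signature).
typedef LlmCall = Future<String> Function(String prompt);

/// Tries the LLM when enabled, validates its output, falls back to the
/// rule-based plan otherwise, and caches accepted LLM plans per schema.
class LayoutPlanner {
  LayoutPlanner({
    required this.rulePlan,
    this.llm,
    this.isValid = _acceptAll,
    this.llmEnabled = true,
    this.timeout = const Duration(seconds: 20),
  });

  final String Function(String schemaJson) rulePlan; // rule-based fallback
  final LlmCall? llm;
  final bool Function(String raw) isValid;
  bool llmEnabled; // bound to the settings toggle
  final Duration timeout;
  final _cache = <String, String>{};

  static bool _acceptAll(String raw) => true;

  Future<String> plan(String schemaJson) async {
    final call = llm;
    if (!llmEnabled || call == null) return rulePlan(schemaJson);
    final cached = _cache[schemaJson];
    if (cached != null) return cached;
    try {
      final raw = await call(schemaJson).timeout(timeout);
      if (!isValid(raw)) return rulePlan(schemaJson);
      return _cache[schemaJson] = raw;
    } catch (_) {
      // Offline, timed out, or backend error: fall back to the rules.
      return rulePlan(schemaJson);
    }
  }
}
```

Only validated LLM results are cached, so a bad reply is retried next time instead of being served from the cache; rule-based results are cheap enough not to need caching.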

##### 2.4 Customization Layer (Optional)

After layout generation, users will be able to:

- Preview different layout suggestions (rule-based vs. LLM)
- Select a layout and make field-level changes (hide/show, rename, rearrange)
- Submit feedback to improve future suggestions (optional)

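Field-level changes can be kept as a separate overrides object applied on top of the generated plan, so the original suggestion stays intact and can be restored. The sketch below assumes a flat list of fields; `FieldView`, `LayoutOverrides`, and the ordering rule (listed keys first, remaining fields in generated order) are hypothetical.

```dart
/// One field in a generated layout plan (hypothetical shape).
class FieldView {
  const FieldView({required this.key, required this.label, this.visible = true});
  final String key;
  final String label;
  final bool visible;

  FieldView copyWith({String? label, bool? visible}) =>
      FieldView(key: key, label: label ?? this.label, visible: visible ?? this.visible);
}

/// User edits from the customization layer, keyed by field key.
class LayoutOverrides {
  const LayoutOverrides({
    this.hidden = const <String>{},
    this.renamed = const <String, String>{},
    this.order = const <String>[],
  });

  final Set<String> hidden;
  final Map<String, String> renamed;
  final List<String> order; // listed keys come first, others keep generated order

  /// Returns a new list; the generated plan itself is never mutated.
  List<FieldView> apply(List<FieldView> generated) {
    final byKey = {for (final f in generated) f.key: f};
    final ordered = [
      for (final k in order)
        if (byKey.containsKey(k)) byKey[k]!,
      for (final f in generated)
        if (!order.contains(f.key)) f,
    ];
    return [
      for (final f in ordered)
        f.copyWith(
          label: renamed[f.key],
          visible: f.visible && !hidden.contains(f.key),
        ),
    ];
  }
}
```

Storing overrides by field key rather than by position also lets them survive a regenerated layout, as long as the API keeps the same field names.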

#### Step 3: Generate and Render UI in Flutter

Once the layout plan is decided (via rule-based mapping or LLM suggestion), the system will dynamically generate the corresponding Flutter widgets based on the API response structure and content types.

##### 3.1 Widget Mapping and Construction

- Each field or group in the parsed schema is mapped to a predefined Flutter widget. Example mappings:
  - List of objects → `DataTable`
  - Simple key-value object → `Card` containing a `Column` of `Text` widgets
  - String fields → `TextField` (if editable) or `SelectableText`
  - Number series over time → line chart (e.g., using the `fl_chart` package)
- The widget structure will be built using standard Dart code with `StatefulWidget` or `StatelessWidget`, depending on interactivity.

Implementation Plan:

- Create a `WidgetFactory` class that receives a layout plan and schema, and returns a widget tree.
- This factory will follow a clean design pattern to make it testable and modular.
- Use custom classes to turn API responses into displayable values, or the `json_serializable` package where the response shape is known ahead of time (it generates code at build time).

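The `WidgetFactory` idea can be prototyped without Flutter by first producing a plain description of the widget tree, which a Flutter layer would then turn into real widgets. In this sketch `UiNode` and the `build` method are hypothetical; it works directly on decoded JSON rather than on a layout plan, and it covers only the mappings listed above (plus a `ListView` for other lists).

```dart
/// Framework-free description of one widget (hypothetical). A Flutter-side
/// factory would turn each node into a real widget.
class UiNode {
  const UiNode(this.type, {this.props = const {}, this.children = const []});
  final String type; // Flutter widget name, e.g. 'DataTable'
  final Map<String, Object?> props;
  final List<UiNode> children;

  @override
  String toString() => children.isEmpty ? type : '$type(${children.join(', ')})';
}

/// Sketch of the 3.1 mappings, applied directly to decoded JSON.
class WidgetFactory {
  UiNode build(Object? data, {String? label}) {
    // List of objects → DataTable; columns are the union of all keys.
    if (data is List && data.isNotEmpty && data.every((e) => e is Map)) {
      final rows = data.cast<Map>();
      final columns = <String>{
        for (final row in rows) ...row.keys.map((k) => '$k'),
      }.toList();
      return UiNode('DataTable', props: {
        'columns': columns,
        'rows': [
          for (final row in rows) [for (final c in columns) '${row[c] ?? ''}'],
        ],
      });
    }
    // Simple key-value object → Card containing a Column of child widgets.
    if (data is Map) {
      return UiNode('Card', children: [
        UiNode('Column', children: [
          for (final entry in data.entries) build(entry.value, label: '${entry.key}'),
        ]),
      ]);
    }
    if (data is List) {
      return UiNode('ListView', children: [for (final item in data) build(item)]);
    }
    if (data is bool) return UiNode('Switch', props: {'label': label, 'value': data});
    // Strings and numbers → read-only SelectableText in the preview.
    return UiNode('SelectableText', props: {'text': label == null ? '$data' : '$label: $data'});
  }
}
```

Keeping this tree framework-free makes the mapping testable without a Flutter test harness, and the same tree could feed both the live preview and the code export.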

##### 3.2 Dynamic Rendering in the App

- The generated widget tree will be rendered in a dedicated "AI UI Preview" pane inside API Dash.
- Rendering will be fully dynamic: when the schema or layout changes, the UI preview updates in real time.
- This pane will support:
  - Light customization such as toggling, reordering, and hiding/showing fields
  - Live data preview using the actual API response

Technical Flow:

- When the user clicks "AI UI Designer", a modal or new route opens with the UI preview panel.
- This panel will:
  - Show the raw schema and layout (editable if needed)
  - Render the widget tree using Flutter's widget system
- Any user adjustment re-triggers widget regeneration and re-rendering.

##### 3.3 Preview and Debugging Tools

- Add a "Developer Mode" that shows:
  - The schema tree
  - Widget mapping details
  - The generated Dart code (read-only)
- This helps with debugging and refining the layout logic.

##### 3.4 Scalability Considerations

- To keep UI rendering responsive:
  - Use lazy loading for large JSON arrays (e.g., scrollable tables)
  - Avoid deep nesting: limit UI depth or use `ExpansionTile` for hierarchical views
  - Support pagination if a list is too long (e.g., with Flutter's `PaginatedDataTable`)

By the end of this step, users should be able to preview their API response as a fully functional, dynamic UI inside API Dash, without writing a single line of Flutter code.

#### Step 4: Export UI Code

Once the user is satisfied with the generated and customized UI layout, the tool should let them export the UI as usable Flutter code that can be reused directly in their own projects. This step focuses on transforming the dynamic widget tree into clean, readable Dart code and offering convenient export options.

##### 4.1 Code Generation Pipeline

To generate Flutter code dynamically, we will:

- Traverse the internal widget tree (from Step 3)
- For each widget, generate the corresponding Dart code using string templates
  - Example: a `DataTable` widget generates its `DataTable` constructor and child rows
- Use indentation and formatting to ensure readability

Implementation Plan:

- Create a `CodeGenerator` class responsible for converting widget definitions into raw Dart code strings.
- Use prebuilt templates for common components: `Card`, `Column`, `DataTable`, etc.
- Handle nested widgets recursively to preserve the structure.

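Here is a minimal sketch of a `CodeGenerator` that walks a widget tree recursively and emits indented Dart source. The `CodeNode` type, the set of single-child widgets, and the file template are assumptions for illustration; arguments are expected to already be valid Dart expressions (for example, string literals already quoted and escaped), and the result could still be passed through `dart format`.

```dart
/// Simplified node for code generation (hypothetical). Arguments are stored
/// as ready-made Dart expressions, e.g. "'Ada'" for a string literal.
class CodeNode {
  const CodeNode(this.type,
      {this.positional = const [], this.named = const {}, this.children = const []});
  final String type;
  final List<String> positional;
  final Map<String, String> named;
  final List<CodeNode> children;
}

/// Sketch of a CodeGenerator that emits indented Dart source recursively.
class CodeGenerator {
  CodeGenerator({this.indent = '  '});
  final String indent;

  // Widgets that take a single `child:` instead of `children:`.
  static const _singleChild = {'Card', 'Padding', 'Center', 'SingleChildScrollView'};

  String generate(CodeNode node, [int level = 0]) {
    final pad = indent * level;
    final inner = indent * (level + 1);
    final lines = ['$pad${node.type}('];
    for (final expr in node.positional) {
      lines.add('$inner$expr,');
    }
    node.named.forEach((name, expr) => lines.add('$inner$name: $expr,'));
    if (node.children.length == 1 && _singleChild.contains(node.type)) {
      // Generate the child one level deeper, then drop its leading padding
      // so it starts on the `child:` line.
      lines.add('${inner}child: ${generate(node.children.single, level + 1).trimLeft()},');
    } else if (node.children.isNotEmpty) {
      lines.add('${inner}children: [');
      for (final child in node.children) {
        lines.add('${generate(child, level + 2)},');
      }
      lines.add('$inner],');
    }
    lines.add('$pad)');
    return lines.join('\n');
  }

  /// Wraps a tree in a StatelessWidget with the import and a header comment.
  String generateFile(String className, CodeNode root) => '''
// This widget was generated from an API response.
import 'package:flutter/material.dart';

class $className extends StatelessWidget {
  const $className({super.key});

  @override
  Widget build(BuildContext context) {
    return ${generate(root, 2).trimLeft()};
  }
}
''';
}
```

For a `Card` containing a `Column` with one `Text('Ada')`, `generate` produces properly nested `child:`/`children:` code with a trailing comma after every argument, and `generateFile` adds the import and class wrapper mentioned in Section 4.4.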

##### 4.2 Export Formats

We will support two export options:

1. Raw Dart code export
   - Output the generated Dart code into a text area or preview pane
   - Allow users to:
     - Copy it to the clipboard
     - Download it as a `.dart` file
   - Highlight syntax for better UX (using a package such as `highlight`)
2. Optional JSON layout export
   - If we implement a config-driven rendering architecture, offer an export of the layout plan/schema as JSON
   - Useful for re-importing or for use with a visual UI builder

##### 4.3 Integration into API Dash

- Add an "Export" button below the UI preview pane
- When clicked, show the generated code in a modal or new tab
- Provide one-click buttons:
  - "Copy Code"
  - "Download Dart File"
  - (Optional) "Download Layout JSON"

##### 4.4 Reusability and Developer Focus

- Ensure that the exported code:
  - Is clean, idiomatic Dart
  - Can be copied directly into any Flutter project with minimal edits
  - Includes basic import statements and class wrappers if needed
- Add helpful comments in the generated code (e.g., `// This widget was generated from API response`)

##### 4.5 Challenges and Considerations

- Ensuring valid syntax across nested widgets
- Handling edge cases (e.g., empty fields, null values)
- Optionally, offering theming/styling presets to match user preferences

By the end of this step, users can turn live API data into reusable Flutter UI code in a few clicks, significantly reducing time spent on repetitive frontend scaffolding.

#### Step 5: Integrate into API Dash

The final step is to fully integrate the AI UI Designer into the API Dash application, so that users can trigger UI generation from real API responses and work through the entire pipeline (from data to UI preview to export) without leaving the app.

##### 5.1 Entry Point in UI

We will add a new button or menu entry labeled "AI UI Designer" in the API response tab (or near the response preview area).

- When a user executes an API call and gets a JSON response:
  - A floating action button or contextual menu becomes available
  - Clicking it opens the AI UI Designer pane

Implementation Plan:

- Extend the existing response panel UI to include a trigger button
- Use `showModalBottomSheet()` or a full-screen route to launch the designer

##### 5.2 Internal Architecture and Flow

The full integration involves several coordinated modules:

- Trigger UI → button click
- JSON Parser Module (Step 1) → converts the API response into a schema
- Mapping Logic (Step 2) → rule-based and/or LLM-assisted UI mapping
- Widget Tree Builder (Step 3) → builds the live widget layout
- Preview + Export UI (Step 4) → lets users customize and extract code

Each module will be built as a reusable Dart service/class, and all UI logic stays within the API Dash UI tree.

We will keep the architecture modular so that the designer logic is isolated and testable.

##### 5.3 Offline / Privacy-Friendly Support

Since API Dash is a privacy-first local client, the AI agent should work entirely offline by default, using lightweight LLMs that run locally through Ollama.

- If a user prefers OpenAI or Anthropic APIs, provide optional settings to configure remote endpoints
- Set Ollama as the default backend, and wrap the LLM logic in a service with interchangeable backends

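A minimal sketch of the interchangeable-backend service follows, assuming Ollama's default local endpoint (`http://localhost:11434/api/generate`) and its non-streaming request shape (`model`, `prompt`, `stream: false`, optional `format: "json"`). `LlmBackend`, `OllamaBackend`, `backendFromSettings`, the settings keys, and the default model name `llama3.2` are hypothetical; remote OpenAI or Anthropic backends would be additional `LlmBackend` implementations and are left out here. Because it uses `dart:io`'s `HttpClient`, it targets desktop and mobile builds rather than the web.

```dart
import 'dart:convert';
import 'dart:io';

/// Backend interface so the designer does not depend on one provider
/// (hypothetical; OpenAI/Anthropic backends would implement it too).
abstract class LlmBackend {
  Future<String> complete(String prompt);
}

/// Default, local backend: one non-streaming call to Ollama's /api/generate.
class OllamaBackend implements LlmBackend {
  OllamaBackend({this.model = 'llama3.2', Uri? endpoint})
      : endpoint = endpoint ?? Uri.parse('http://localhost:11434/api/generate');

  final String model; // assumption: any model pulled locally with `ollama pull`
  final Uri endpoint;

  /// `format: json` asks Ollama to constrain the output to valid JSON.
  Map<String, Object> requestBody(String prompt) =>
      {'model': model, 'prompt': prompt, 'stream': false, 'format': 'json'};

  @override
  Future<String> complete(String prompt) async {
    final client = HttpClient();
    try {
      final request = await client.postUrl(endpoint);
      request.headers.contentType = ContentType.json;
      request.add(utf8.encode(jsonEncode(requestBody(prompt))));
      final response = await request.close();
      final body = await response.transform(utf8.decoder).join();
      if (response.statusCode != HttpStatus.ok) {
        throw HttpException('Ollama returned ${response.statusCode}: $body', uri: endpoint);
      }
      // With stream=false, the generated text is in the "response" field.
      return (jsonDecode(body) as Map<String, dynamic>)['response'] as String;
    } finally {
      client.close();
    }
  }
}

/// Chooses a backend from user settings; remote providers are not wired up here.
LlmBackend backendFromSettings(Map<String, String> settings) {
  final name = settings['llm_backend'] ?? 'ollama';
  if (name == 'ollama') {
    return OllamaBackend(model: settings['model'] ?? 'llama3.2');
  }
  throw UnsupportedError('LLM backend "$name" is not configured');
}
```

Keeping the provider behind `LlmBackend` means the rest of the designer only ever sees a prompt going in and raw text coming out, so switching from the local default to a remote API is a settings change rather than a code change.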

##### 5.4 User Flow Example

1. The user sends an API request in API Dash.
2. The JSON response is shown.
3. The user clicks the "AI UI Designer" button.
4. The parsed structure is shown with layout suggestions.
5. The user previews the UI, rearranges components, and customizes styles.
6. Once satisfied, the user clicks "Export".
7. Dart code is generated and available to copy or download.

##### 5.5 Tests, Documentation & Maintenance

- Add integration tests to validate:
  - Triggering and rendering behavior
  - Correct widget tree output
  - Export accuracy
- Document:
  - Each module (parsing, mapping, UI rendering, export)
  - A developer usage guide (in `docs/`)
- Ensure all new code follows API Dash's contribution style and linting rules

By integrating into API Dash cleanly and modularly, this feature becomes a native part of the developer workflow, helping users turn an API response into usable UI without leaving the app.

---


## Weekly Timeline (Tentative)

| Week | Milestone |
|------|-----------|
| Community Bonding | Join Discord, introduce myself, understand the API Dash architecture, and finalize the scope with mentors |
| Week 1 | Build a recursive parser for JSON responses; test it on static examples; output schema trees |
| Week 2 | Extend the parser to handle nested objects, arrays, and basic pattern recognition (e.g., timestamps) |
| Week 3 | Implement the rule-based schema-to-widget mapper; define mapping logic for tables, cards, and forms |
| Week 4 | Design the widget data model and the logic for translating schemas into Flutter widget trees |
| Week 5 | Develop the dynamic Flutter widget generator; render `DataTable`, `Card`, `TextField`, etc. |
| Week 6 | Build a basic UI preview pane inside API Dash with user interaction support (e.g., toggles) |
| Week 7 (Midterm Evaluation) | Submit code with the parser, rule-based mapping, and preview UI; receive mentor feedback |
| Week 8 | Add layout customization features: visibility toggles, reordering, field labels |
| Week 9 | Integrate a basic Ollama-based LLM agent for field naming and layout suggestions |
| Week 10 | Abstract the LLM backend to support GPT/Anthropic alternatives via API configuration |
| Week 11 | Implement code export: generate Dart source code, with copy-to-clipboard and download options |
| Week 12 | Optional: add JSON config export; polish UX and improve error handling |
| Week 13 | Write documentation, a developer setup guide, and internal tests for each module |
| Week 14 (Final Evaluation) | Final review, cleanup, response to feedback, and submission |

Thanks again for your time and guidance. I've already started studying the API Dash codebase and developer guide, and I'd love your feedback on this plan: does it align with your vision?

If selected, I'm excited to implement this project. If this idea is already taken, I'm open to switching to another API Dash project that fits my background.

@ -0,0 +1,76 @@

### About

1. **Full Name**: Sunil Kumar Sharma
2. **Contact Info**: sharma.sunil12527@gmail.com, +91 8979696414
3. **Discord User ID**: AZURE (502613458638995456)
4. **GitHub Handle**: https://github.com/Azur3-bit
5. **Socials**: https://www.linkedin.com/in/sunil-sharma-206871205/
6. **Time Zone**: GMT +5:30 (India)
7. **Resume**: https://drive.google.com/file/d/1B3ixbrlPwwCfFw8Lcq3LXvW3N5dWmviX/view?usp=sharing

### University Info

1. **University Name**: SRM Institute of Science and Technology
2. **Program**: B.Tech in Computer Science & Engineering
3. **Year**: 4th (Final Year)
4. **Expected Graduation Date**: June 2025

### Motivation & Past Experience

1. **Have you worked on or contributed to a FOSS project before?**

   Yes, I have actively contributed to open-source projects, including adding support for PHP, Rust, and Golang, improving UI elements, and enhancing test coverage in various repositories. Some of my notable contributions:

   - Added support for PHP, Rust, and Golang in an online compiler.
   - Improved UI/UX for an online coding platform.
   - Introduced a Python script for OpenAI key validation.
   - Link to relevant PR: https://github.com/kalviumcommunity/compilerd/pull/139

   My PR was not merged because of a shift in project priorities, but the maintainers appreciated my effort and gave constructive feedback that helped me refine my contributions.

2. **What is your one project/achievement that you are most proud of? Why?**

   One of my proudest achievements is my project on **Self-Optimizing and Intelligent Cloud Infrastructure**. The system integrates AWS Predictive Auto-Scaling with CloudWatch monitoring and cost-optimization techniques, reducing infrastructure costs by ₹766.82 per month. This project showcases my expertise in **cloud computing, automation, and cost optimization** applied to a real-world problem.

3. **What kind of problems or challenges motivate you the most to solve them?**

   I like working on problems that push me to improve efficiency, strengthen security, and automate complex processes. Challenges in **API authentication, cloud infrastructure, and scalable systems** interest me the most because they require balancing security, optimization, and real-world constraints.

4. **Will you be working on GSoC full-time?**

   Yes, I will be working full-time on my GSoC project.

5. **Do you mind regularly syncing up with the project mentors?**

   Not at all! Regular sync-ups will help keep the work aligned with project goals and support continuous improvement.

6. **What interests you the most about API Dash?**

   API Dash is a lightweight and efficient API testing tool that avoids the unnecessary complexity of other platforms. I like how it keeps things simple while integrating AI to make API testing more intuitive and developer-friendly.

7. **Can you mention some areas where the project can be improved?**

   - **Authentication Mechanisms**: Implementing **Multi-Factor Authentication (MFA)**, including biometric authentication, would strengthen security and improve the user experience. Having worked on MFA in payment gateways, I can integrate fingerprint recognition to streamline authentication and reduce reliance on passwords. Secure storage will protect credentials, allowing fast authentication for valid users on both mobile and desktop platforms.

### Project Proposal Information

#### Proposal Title: **Enhancing API Authentication & Secure Storage in API Dash**

#### Abstract

This project aims to **implement secure storage for authentication tokens using Flutter Secure Storage and integrate biometric authentication** as an additional layer of security. The goal is to **enhance security while keeping API Dash lightweight and user-friendly**.

![Auth image](<doc/proposals/2025/gsoc/images/sunil Auth image.png>)

#### Detailed Description

| Feature | Description |
|---------|-------------|
| **Secure Token Storage** | Use **Flutter Secure Storage** (`flutter_secure_storage`) to store authentication tokens in encrypted form. |
| **Biometric Authentication** | Enable **fingerprint unlock** for accessing stored API credentials. |
| **Improved UI for Authentication Management** | Add an intuitive UI for securely managing saved authentication methods. |
| **Multiple Authentication Methods** | Ensure seamless support for Basic Auth, API Key, JWT, OAuth 1.0, OAuth 2.0, and Digest Authentication. |
| **Efficient Request Handling** | Ensure the secure storage integration does not slow down API requests. |

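The secure storage and biometric gate could be wired together roughly as follows. To keep the sketch runnable without Flutter, it depends on two hypothetical abstractions: `SecureStore`, which in the app would wrap `FlutterSecureStorage` from the `flutter_secure_storage` plugin (its `write(key:, value:)`, `read(key:)`, and `delete(key:)` methods), and `BiometricCheck`, which could call `LocalAuthentication().authenticate(localizedReason: ...)` from the `local_auth` plugin. `CredentialVault`, `InMemoryStore`, and the `apidash.auth.<requestId>.<field>` key scheme are illustrative assumptions.

```dart
/// Storage abstraction; the app version would delegate to FlutterSecureStorage.
abstract class SecureStore {
  Future<void> write(String key, String value);
  Future<String?> read(String key);
  Future<void> delete(String key);
}

/// In-memory stand-in used so the sketch runs without Flutter.
class InMemoryStore implements SecureStore {
  final _data = <String, String>{};

  @override
  Future<void> write(String key, String value) async {
    _data[key] = value;
  }

  @override
  Future<String?> read(String key) async => _data[key];

  @override
  Future<void> delete(String key) async {
    _data.remove(key);
  }
}

/// Returns true when the user passed the biometric prompt.
typedef BiometricCheck = Future<bool> Function(String reason);

/// Gates credential reads behind a biometric check and namespaces keys per request.
class CredentialVault {
  CredentialVault(this.store, this.verify);

  final SecureStore store;
  final BiometricCheck verify;

  String _key(String requestId, String field) => 'apidash.auth.$requestId.$field';

  Future<void> save(String requestId, String field, String secret) =>
      store.write(_key(requestId, field), secret);

  /// Returns null if the user cancels or fails biometric verification.
  Future<String?> unlock(String requestId, String field) async {
    final verified = await verify('Unlock saved credentials for this request');
    if (!verified) return null;
    return store.read(_key(requestId, field));
  }

  Future<void> forget(String requestId, String field) =>
      store.delete(_key(requestId, field));
}
```

Keeping the plugins behind interfaces also lets platforms without biometric hardware supply a different check (for example, an OS password prompt) without changing the vault logic.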

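Once a credential is unlocked, it still has to become request headers. The sketch below covers only the header-based methods from the table (Basic Auth, API Key, and bearer tokens for JWT/OAuth 2.0); Digest and OAuth 1.0 need per-request challenge or signature computation and are omitted. The `AuthMethod` hierarchy and `authHeaders` are hypothetical names, and the code uses Dart 3 sealed classes and patterns.

```dart
import 'dart:convert';

/// Header-based auth methods (hypothetical model).
sealed class AuthMethod {}

class BasicAuth extends AuthMethod {
  BasicAuth(this.username, this.password);
  final String username;
  final String password;
}

/// JWTs and OAuth 2.0 access tokens are both sent as bearer tokens.
class BearerToken extends AuthMethod {
  BearerToken(this.token);
  final String token;
}

/// API key sent in a custom header (query-parameter keys are not covered).
class ApiKey extends AuthMethod {
  ApiKey(this.headerName, this.value);
  final String headerName;
  final String value;
}

/// Builds request headers from an unlocked credential.
Map<String, String> authHeaders(AuthMethod auth) => switch (auth) {
      BasicAuth(:final username, :final password) => {
          'Authorization': 'Basic ${base64Encode(utf8.encode('$username:$password'))}',
        },
      BearerToken(:final token) => {'Authorization': 'Bearer $token'},
      ApiKey(:final headerName, :final value) => {headerName: value},
    };
```

For the RFC 7617 example credentials `Aladdin` / `open sesame`, the Basic header value is `Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==`. Because `AuthMethod` is sealed, adding a new method later makes the compiler flag every `switch` that does not handle it yet.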

#### Weekly Timeline

| Week | Tasks |
|------|-------|
| **Week 1** | Study API Dash's authentication mechanisms and security vulnerabilities. Set up the development environment. |
| **Week 2** | Implement **Flutter Secure Storage** for encrypted token storage. |
| **Weeks 3-5** | Integrate **biometric authentication** for secure access to stored credentials. Improve the UI for securely managing authentication credentials. |
| **Weeks 6-9** | Implement and test multiple authentication methods (Basic Auth, API Key, JWT, OAuth, Digest Auth) with secure storage. Ensure **efficient API request handling** with the secure storage integration. |
| **Week 10** | Optimize performance and conduct security audits of token storage. |
| **Week 11** | Improve documentation for secure authentication management in API Dash. |
| **Week 12** | Conduct thorough testing, debugging, and security validation. Prepare the final report and demo, and submit the project. |

@ -0,0 +1,342 @@

### GSoC Proposal

## About

**Full Name**: Abhinav Sharma

**Contact Info**: abhinavs1920bpl@gmail.com, +91 9479960041

**Discord Handle**: abhinavs1920

**GitHub Profile**: [github.com/abhinavs1920](https://github.com/abhinavs1920)

**Twitter, LinkedIn, Other Socials**: [linkedin.com/in/abhinavs1920](https://linkedin.com/in/abhinavs1920)

**Time Zone**: Indian Standard Time (IST)

**Resume**: [https://bit.ly/4iEPEkZ](https://bit.ly/4iEPEkZ)

## University Info

**University Name**: ABV-Indian Institute of Information Technology and Management, Gwalior

**Program**: B.Tech. in Information Technology

**Year**: 3rd Year

**Expected Graduation Date**: May 2026

## Motivation & Past Experience

**Have you worked on or contributed to a FOSS project before?**

Yes, I have contributed to various FOSS projects, including APIDash. Here are some relevant PRs and repository links:

- [Feature that allows users to configure and use a proxy for their HTTP requests within API Dash](https://github.com/foss42/apidash/pull/544)
- [Support for running multiple API requests in parallel](https://github.com/foss42/apidash/pull/734)
- [Support for fetching environment variables directly from the OS in API Dash](https://github.com/foss42/apidash/pull/662)
- [Implementation of an in-app update check feature for APIDash](https://github.com/foss42/apidash/pull/660)
- [Updated the .env.template to use SERVER_CLIENT_ID instead of GOOGLE_SIGNIN_ANDROID_CLIENT_ID](https://github.com/AOSSIE-Org/Monumento/pull/229)
- [Addressed the issue of unhandled exceptions when loading environment variables from a .env file](https://github.com/AOSSIE-Org/Monumento/pull/215)
- [Dependency upgrades in Talawa](https://github.com/PalisadoesFoundation/talawa/pull/2353)

**What is your one project/achievement that you are most proud of? Why?**

The project I'm most proud of is MapIt, a location-based note-taking app I built with Flutter. It lets users create notes, tag locations using Google Maps, and set reminders with notifications. What makes this project special is that it combines several advanced features, such as Google Sign-In, geofencing, a background notification service, and battery optimization, to ensure a smooth user experience.

One of the most challenging yet rewarding aspects was implementing background services efficiently, so that notifications and location tracking kept working even when the app was closed, without draining the battery. I also optimized the use of isolates to handle background tasks asynchronously, improving app performance.

This project pushed me to learn state management, background execution, and efficient API handling. Seeing it come together and solve real-world problems made me really proud.

**What kind of problems or challenges motivate you the most to solve them?**

I love working with Flutter and building automation solutions for real-world problems that help users worldwide. Building applications that streamline workflows, reduce manual effort, and improve the user experience excites me the most. I strive to solve practical problems that users face daily by developing scalable and user-friendly applications. My passion lies in integrating different technologies, optimizing performance, and refining processes to improve developer productivity. Whether it's automating API testing, optimizing background processes, or designing intuitive UI/UX, I always aim to create applications that make a difference.

**Will you be working on GSoC full-time?**

Yes, I will be dedicating my full time to GSoC.

**Do you mind regularly syncing up with the project mentors?**

Not at all; I am comfortable with regular sync-ups.

**What interests you the most about API Dash?**

The ability to create automated API workflows excites me. I am particularly interested in designing an intuitive UI/UX for API testing and workflow automation. API Dash is built with Flutter, a lightweight, multi-platform framework that delivers smooth performance across operating systems. With frequent updates and contributions from a dedicated open-source community, API Dash evolves continuously to meet developer needs. It offers a streamlined alternative to API testing tools like Postman, focusing on simplicity, efficiency, and scalability. Being part of a project with an active and growing contributor base excites me, as it offers opportunities to improve developer workflows and enhance automation capabilities in API testing.

**Can you mention some areas where the project can be improved?**

- Enhanced error handling mechanisms in API requests.
- More detailed analytics and logging for API testing.
- The ability to export and share API test results easily.
- Syncing API test cases using authentication providers such as OAuth, Google Sign-In, and GitHub authentication.
- A search bar across features, improving navigation and usability.
- An expanded Testing Suite with additional validation mechanisms.
- A Workflow Builder with better API chaining and conditional logic.
- A Collection Runner with parallel execution and scheduled runs.
- An API Monitor with real-time tracking and alert notifications.

## Proposal Title

**Enhancing API Testing Suite in APIDash: Collection Runner, Workflow Builder & Monitoring**

## Abstract

APIDash is an open-source, lightweight API testing and management tool built with Flutter. In this project, I will extend its capabilities by integrating an advanced API Testing Suite featuring:

- **Collection Runner**: I plan to implement the ability to execute batches of API requests sequentially or in parallel.
- **Workflow Builder**: I aim to create a drag-and-drop UI for building API request workflows with conditional logic.
- **API Monitoring & Analytics**: I will develop real-time monitoring, failure alerts, and execution insights.

By integrating these features, I will turn APIDash into an end-to-end API testing solution, improving efficiency and usability for developers.

## Detailed Description

### Key Features:

- **API Validation Testing**: I intend to implement schema validation and assertion checks.
- **Integration Testing**: I will verify that chained APIs interact correctly with one another.
- **Security Testing**: I plan to develop features to identify vulnerabilities and secure endpoints, including detection of attacks such as script injection.
- **Performance Testing**: I aim to create tools to measure API performance under load.
- **Scalability Testing**: I will implement features to check that APIs scale efficiently.
- **Collection Runner**: I plan to automate batch API executions, with the ability to send variable data payloads.
- **Workflow Builder**: I aim to create a drag-and-drop interface for chaining API requests.
- **Monitoring System**: I will implement a system to track API responses and errors.
- **Sync with authentication providers**: I plan to integrate authentication services for seamless API test management.
- **Implement a global search bar**: I aim to add a search bar for quick navigation and feature discovery.

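Underneath the drag-and-drop Workflow Builder, the run itself could be a simple interpreter over a list of steps. The following sketch assumes hypothetical `WorkflowStep`, `StepResult`, and `runWorkflow` types, `{{variable}}` placeholders in URLs, and a condition model in which a failed condition stops the run; richer branching (if/else targets) would need a graph rather than a list.

```dart
/// Result of one request in a workflow (hypothetical shape).
class StepResult {
  StepResult(this.statusCode, this.body);
  final int statusCode;
  final Map<String, Object?> body;
}

/// One step: a request plus an optional condition on the previous result.
class WorkflowStep {
  WorkflowStep(this.name, this.urlTemplate, {this.condition, this.extract = const {}});
  final String name;
  final String urlTemplate; // may contain {{variable}} placeholders
  final bool Function(StepResult previous)? condition; // null = always run
  final Map<String, String> extract; // variable name -> key in the response body
}

typedef SendRequest = Future<StepResult> Function(String url);

final _placeholder = RegExp(r'\{\{(\w+)\}\}');

/// Runs steps in order, passing extracted values forward and stopping at
/// the first step whose condition rejects the previous result.
/// Returns the URLs that were actually requested.
Future<List<String>> runWorkflow(List<WorkflowStep> steps, SendRequest send) async {
  final vars = <String, String>{};
  final executedUrls = <String>[];
  StepResult? previous;
  for (final step in steps) {
    final condition = step.condition;
    if (previous != null && condition != null && !condition(previous)) break;
    // Fill placeholders; unknown variables are left as-is.
    final url = step.urlTemplate
        .replaceAllMapped(_placeholder, (m) => vars[m[1]!] ?? m[0]!);
    final result = await send(url);
    executedUrls.add(url);
    step.extract.forEach((variable, key) {
      final value = result.body[key];
      if (value != null) vars[variable] = '$value';
    });
    previous = result;
  }
  return executedUrls;
}
```

Passing the request function in as `send` keeps the runner independent of APIDash's HTTP layer, which also makes it easy to test with canned responses.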

**AI-Enhanced Features (if time permits):**

- **AI-Assisted Test Case Generation**: I plan to develop functionality that automatically generates diverse and randomized API test cases by analyzing historical API data or API documentation, ensuring extensive coverage of edge cases.
- **Predictive Performance Optimization**: I aim to use predictive analytics to forecast the impact of API load and dynamically adjust test parameters.

### Tech Stack:

- **Frontend**: Flutter (Dart)
- **State Management**: Riverpod, Provider
- **Backend**: Firebase or Node.js/Golang (if required for logging and monitoring)
- **Testing Framework**: Postman alternatives, automated test scripts
- **AI Model**: Gemini or similar (if required)

### Integration with APIDash Architecture

The proposed features will integrate with APIDash's existing architecture:

**State Management**: I plan to use APIDash's Riverpod-based state management pattern, creating new providers for Collection Runner and Workflow states that follow the patterns in existing files such as `lib/providers/collection_state_provider.dart` and `lib/providers/selected_request_provider.dart`.

**Data Persistence**: I aim to use APIDash's Hive implementation with new type adapters for workflow models and test results, following the existing pattern in `lib/services/local_db_service.dart`.

**UI Components**: I will build on the APIDash Design System components, ensuring consistent styling by extending widgets from the `apidash_design_system` package. New UI elements will follow the existing Material Design implementation.

**Cross-Platform Support**: I plan to make all new features work across desktop, mobile, and web platforms by avoiding platform-specific code and using Flutter's responsive design patterns to adapt layouts to different screen sizes.

## Project Plan for Enhancing APIDash

### Phase 1: Research & Planning (Weeks 1-2)

**Objective:**

My first task will be to analyze the existing APIDash codebase, identify integration points, and finalize the technical approach.

#### Week 1: Codebase Analysis & Technical Research

**Codebase Understanding:**

- I plan to thoroughly analyze APIDash's architecture, including request execution, storage, and UI components.
- My goal is to identify reusable components to avoid redundant implementation.

**Integration Points Identification:**

- I will locate areas where new features (API collection management, batch execution, workflow automation) can be integrated.
- I will identify dependencies and potential conflicts with existing modules.

#### Week 2: UI/UX Planning & Tech Stack Finalization

**Technical Approach Finalization:**

- I will define data structures for API collections and workflows.
- I will evaluate local storage solutions (such as SQLite or Hive) against cloud-based options, considering potential future expansion to cloud synchronization.
- I will assess appropriate tools for batch execution, such as threading, isolates, or queue-based execution. The current approach uses isolates and `Future.then()` for concurrent API testing; see PR [Stress Testing](https://github.com/foss42/apidash/pull/734).

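For the Collection Runner's sequential and parallel modes, the sketch below shows one way to bound concurrency with plain futures. `RunResult`, `RequestFn`, `runSequential`, and `runParallel` are hypothetical names, and the default of four requests in flight is an arbitrary assumption. This is an alternative to the isolate-based approach mentioned above, not a description of the existing PR.

```dart
/// Outcome of one request in a collection run (hypothetical shape).
class RunResult {
  RunResult(this.name, this.ok, this.elapsed, [this.error]);
  final String name;
  final bool ok; // true for 2xx status codes
  final Duration elapsed;
  final Object? error;
}

/// A request to run; returns the HTTP status code (hypothetical signature).
typedef RequestFn = Future<int> Function();

Future<RunResult> _runOne(String name, RequestFn request) async {
  final stopwatch = Stopwatch()..start();
  try {
    final status = await request();
    return RunResult(name, status >= 200 && status < 300, stopwatch.elapsed);
  } catch (e) {
    return RunResult(name, false, stopwatch.elapsed, e);
  }
}

/// Runs the collection one request at a time, in order.
Future<List<RunResult>> runSequential(Map<String, RequestFn> requests) async {
  final results = <RunResult>[];
  for (final entry in requests.entries) {
    results.add(await _runOne(entry.key, entry.value));
  }
  return results;
}

/// Runs the collection with at most [maxConcurrent] requests in flight.
/// HTTP calls are asynchronous I/O, so this concurrency needs no isolates;
/// isolates matter mainly for CPU-heavy work such as parsing huge responses.
Future<List<RunResult>> runParallel(Map<String, RequestFn> requests,
    {int maxConcurrent = 4}) async {
  if (maxConcurrent < 1) {
    throw ArgumentError.value(maxConcurrent, 'maxConcurrent', 'must be at least 1');
  }
  final entries = requests.entries.toList();
  final results = List<RunResult?>.filled(entries.length, null);
  var next = 0;
  // Each worker claims the next unclaimed index; Dart runs this code on a
  // single event loop, so the check-and-increment cannot race.
  Future<void> worker() async {
    while (next < entries.length) {
      final i = next++;
      results[i] = await _runOne(entries[i].key, entries[i].value);
    }
  }

  await Future.wait([for (var w = 0; w < maxConcurrent; w++) worker()]);
  return [for (final r in results) r!];
}
```

Results are stored by index, so the report lists requests in collection order even though they may finish out of order, and a failing request is recorded rather than aborting the whole run.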

**UI/UX Design:**

- I'll create wireframes for new UI components such as the API collection manager, workflow builder, and test suite dashboards.
- My designs will align with APIDash's existing UI framework.

### Phase 2: Enhancing API Collection Management (Weeks 3-4)

**Objective:**